As AI workloads grow more demanding, GPUs are scaling up to match. NVIDIA’s latest GB300 GPU now features 288GB of high-bandwidth memory (HBM) — a leap from the GB200’s 192GB — enabling faster training and larger models.

To keep up, next-gen AI servers are adopting PCIe Gen6 and moving toward 200G PAM4 signaling per lane, pushing total bandwidth even higher.

Enter the NVIDIA ConnectX-8 SuperNIC, the world’s first 800G SmartNIC. It delivers up to 800 Gb/s over InfiniBand and 400 Gb/s over Ethernet, purpose-built for high-throughput AI clusters and HPC environments.

To support this bandwidth shift and prepare for what comes next, Infraeo is actively developing 800G & 1.6T copper and optical interconnects — engineered for the next generation of compute infrastructure.

In this blog, we cover:

- An introduction to the ConnectX-8 SuperNIC

- Key upgrades in ConnectX-8 vs. ConnectX-7

- Common deployment architectures and their connectivity requirements, and how Infraeo supports all deployments with DACs, ACCs, AOCs, and optical transceivers

For both Ethernet and InfiniBand environments, Infraeo offers a range of interconnect options designed to support full-bandwidth NVIDIA ConnectX-8 deployments.

What is ConnectX-8 SuperNIC?

ConnectX-8 is the eighth generation of NVIDIA’s ConnectX SmartNIC family, engineered for next-generation AI clusters, hyperscale data centers, and high-performance computing (HPC) environments. It combines high-throughput networking with hardware-accelerated compute offload, enabling ultra-low latency and efficient data movement across distributed workloads. With support for 400G and 800G operation over Ethernet and InfiniBand, ConnectX-8 is optimized for demanding use cases such as AI model training, real-time inference, and distributed storage.

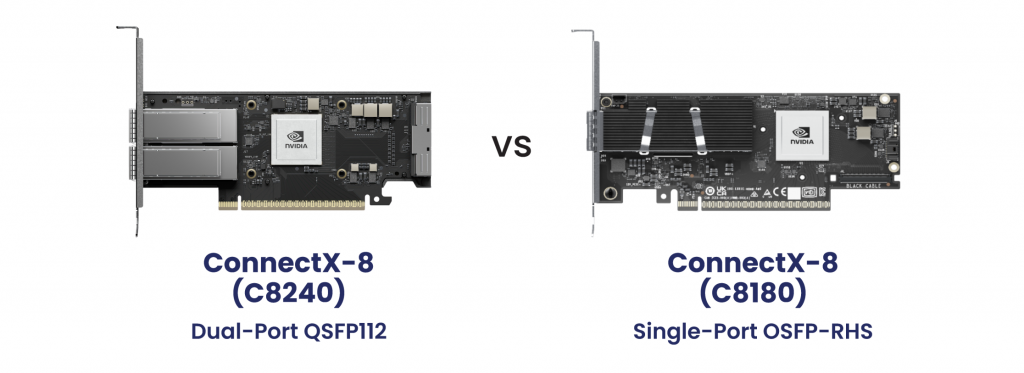

ConnectX-8 offers a variety of hardware packaging forms to adapt to different server/motherboard specifications. In terms of port type, it comes in two main versions, which this article will focus on: the single-port OSFP model (C8180) and the dual-port QSFP112 model (C8240). Both support Ethernet and InfiniBand and are designed for PCIe Gen6 interfaces.

- The C8180 features one OSFP cage, configurable as a full 800G link or two 400G links.

- The C8240 has two QSFP112 ports, each supporting up to 400G.

Choosing between the two depends on available switch ports, GPU topology, and required bandwidth per node. These models define the core configurations covered in the next section.
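The two port layouts described above can be written down as a small data model. This is only an illustrative sketch: the class name `NicModel` and its fields are hypothetical, and the numbers are the ones quoted in this article, not values read from a datasheet or a device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NicModel:
    """Hypothetical description of a ConnectX-8 port layout (illustration only)."""
    name: str
    cage_type: str
    cages: int
    # Each mode is a tuple of link speeds (Gb/s) that one cage can be split into.
    link_modes_per_cage: tuple

    def max_bandwidth_gbps(self) -> int:
        # Best case: every cage runs its highest-throughput mode.
        return self.cages * max(sum(mode) for mode in self.link_modes_per_cage)

    def max_links(self) -> int:
        # Largest number of independent links the card can terminate.
        return self.cages * max(len(mode) for mode in self.link_modes_per_cage)


# Values taken from the text: one OSFP cage (1x800G or 2x400G),
# or two QSFP112 ports at up to 400G each.
C8180 = NicModel("C8180", "OSFP", 1, ((800,), (400, 400)))
C8240 = NicModel("C8240", "QSFP112", 2, ((400,),))

if __name__ == "__main__":
    for nic in (C8180, C8240):
        print(f"{nic.name}: {nic.cages} x {nic.cage_type}, "
              f"up to {nic.max_bandwidth_gbps()} Gb/s over {nic.max_links()} link(s)")
```

Both models reach the same aggregate bandwidth in this model. The difference is how that bandwidth is exposed physically, which is why switch port type and topology drive the choice.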

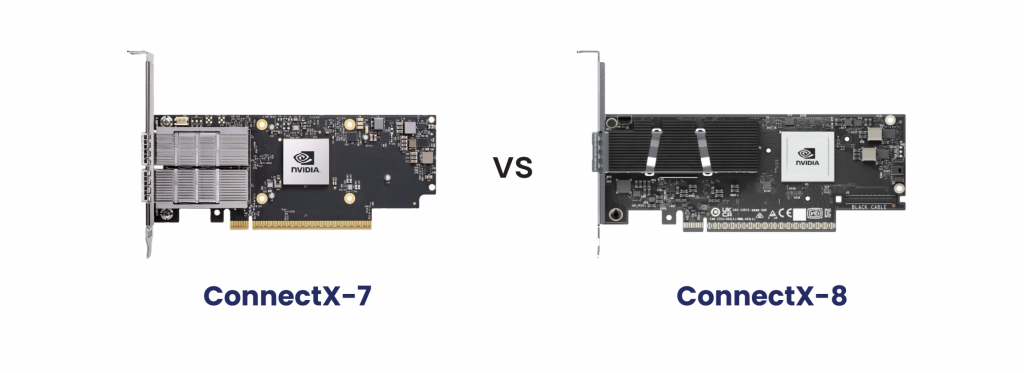

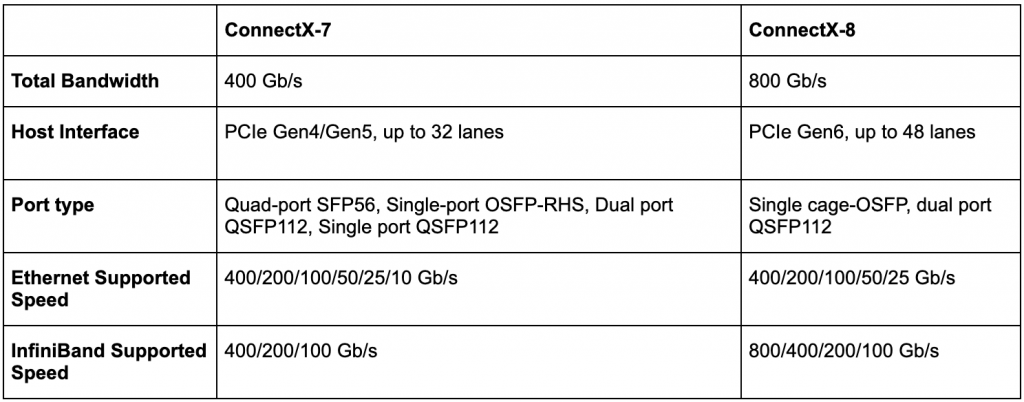

ConnectX-8 vs. ConnectX-7: What’s Changed and Why It Matters

ConnectX-8 is NVIDIA’s answer to the rising bandwidth demands brought on by PCIe Gen6, 200G-per-lane signaling, and 800G switch architectures. It delivers double the data rate of ConnectX-7 and is purpose-built for next-gen AI infrastructure.

The upgrade to PCIe Gen6 removes host-side bottlenecks, allowing ConnectX-8 to fully align with the bandwidth of next-generation GPUs and switches. It also introduces 200G-per-lane PAM4 signaling — doubling the lane rate of ConnectX-7 and raising the bar for signal integrity and system design.
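A quick back-of-the-envelope check shows why the host interface matters. The sketch below assumes a x16 link and uses the raw per-lane signaling rates of PCIe Gen5 (32 GT/s) and Gen6 (64 GT/s), ignoring encoding and protocol overhead, so real usable throughput is somewhat lower. The function names are hypothetical.

```python
# Raw signaling rate per lane, per direction, in GT/s.
PCIE_GTPS_PER_LANE = {"Gen5": 32, "Gen6": 64}


def pcie_raw_gbps(generation: str, lanes: int = 16) -> int:
    """Raw one-direction link rate in Gb/s (no encoding/protocol overhead)."""
    return PCIE_GTPS_PER_LANE[generation] * lanes


def host_can_feed(generation: str, nic_gbps: int, lanes: int = 16) -> bool:
    """True if the raw PCIe rate is at least the NIC's network rate."""
    return pcie_raw_gbps(generation, lanes) >= nic_gbps


if __name__ == "__main__":
    for gen in ("Gen5", "Gen6"):
        verdict = "enough" if host_can_feed(gen, 800) else "a bottleneck"
        print(f"PCIe {gen} x16: {pcie_raw_gbps(gen)} Gb/s raw, {verdict} for 800G")
```

Under these assumptions a Gen5 x16 slot cannot keep an 800G port busy even before overhead is counted, while a Gen6 x16 slot has headroom.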

Connectivity solutions from ConnectX-7-era deployments, such as 400G QSFP112 and OSFP-RHS cables and modules, remain usable with ConnectX-8 in 400G configurations. ConnectX-8 is also backward compatible with 200G/100G rates, adapting to existing infrastructure. However, to unlock the full 800G potential of ConnectX-8, updated cabling and optics are required. ConnectX-8 is also positioned to support future 1.6T infrastructure, making it a forward-and-backward-compatible choice.

Common Connectivity Configurations and Solutions

ConnectX-8 NICs can be deployed in several configurations based on the network protocol (Ethernet or InfiniBand) and the switch port type. This section outlines the main Ethernet deployment scenarios and the corresponding connectivity options Infraeo offers to support each one.

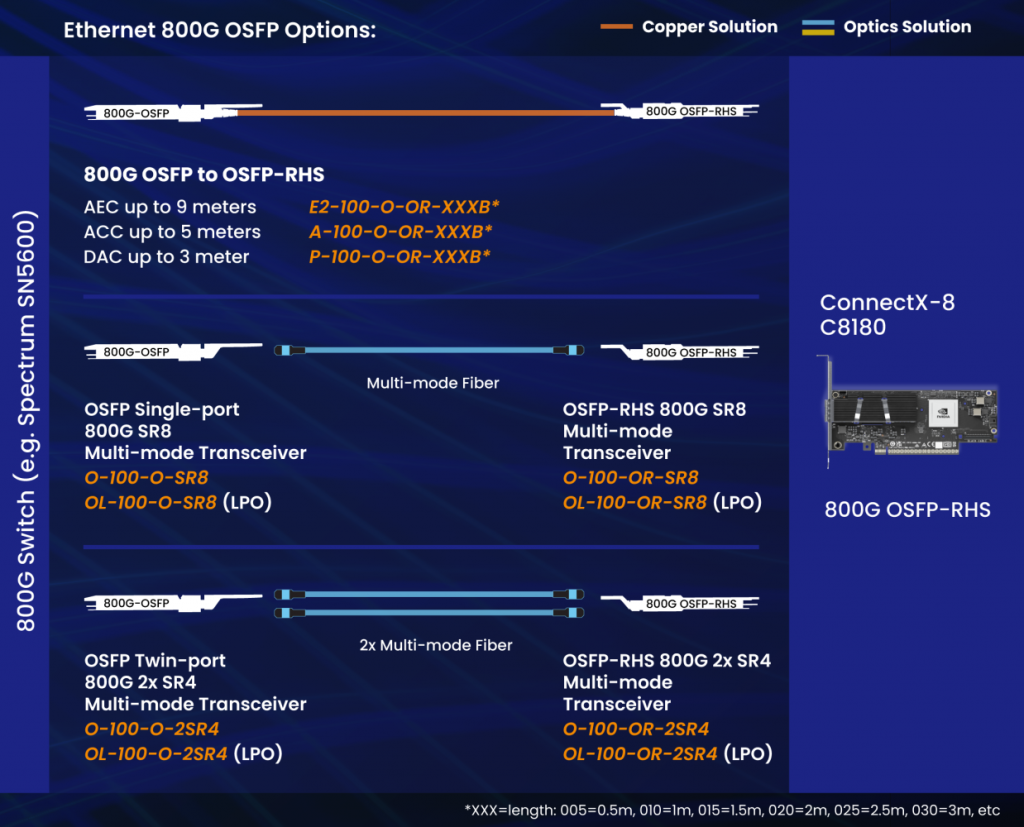

Configuration 1: 800G Ethernet — 1 800G Switch to 1 ConnectX-8 C8180

This setup connects an 800G OSFP port on an 800G Ethernet switch, such as the NVIDIA Spectrum SN5600, to the OSFP-RHS port on the ConnectX-8 C8180 NIC. It can run as a single 800G connection or as 2x400G. This is the most straightforward path when both ends support OSFP and 800G operation.

Infraeo offers straight-through OSFP to OSFP-RHS DACs, AECs, and ACCs for short-reach connections. On the optics side, Infraeo offers single-port and twin-port SR transceivers in OSFP and OSFP-RHS form factors, covering both the switch side and the NIC side. Low-power LPO transceivers are also available for power-sensitive deployments. For more details, see our product offerings at https://www.infraeo.com/products/

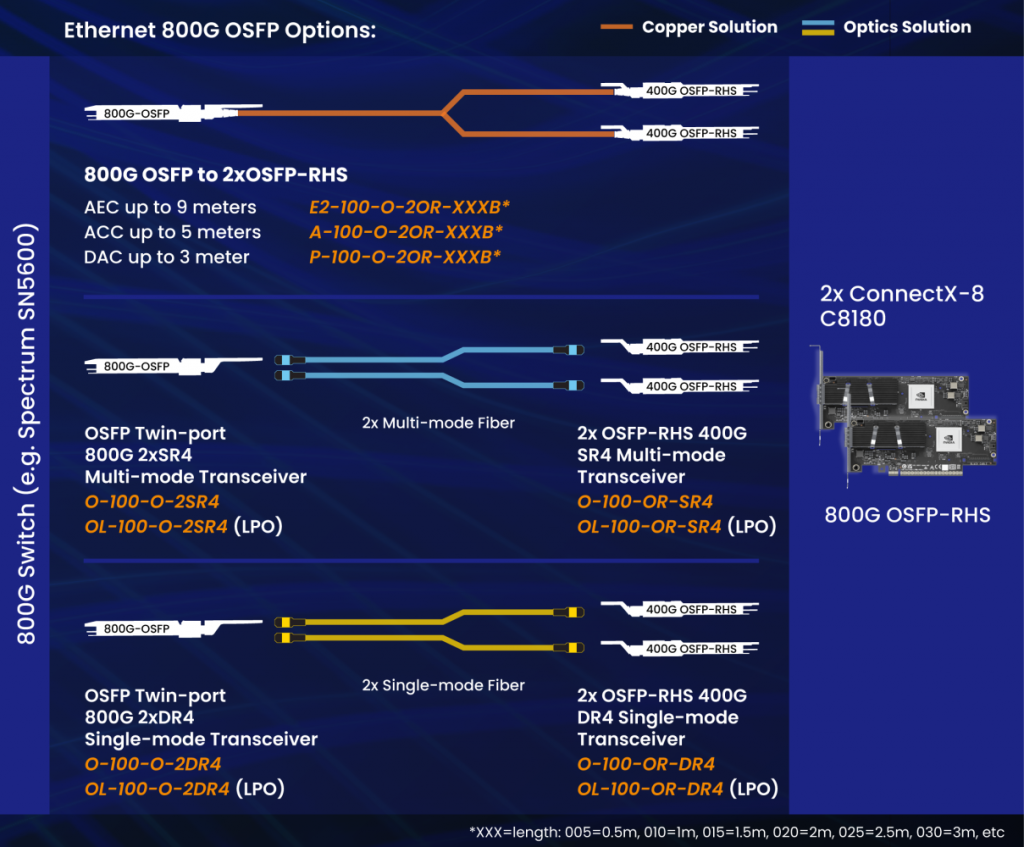

Configuration 2: 800G Ethernet — 1 800G Switch to 2 ConnectX-8 C8180

This setup uses one 800G OSFP port on an 800G Ethernet switch, such as the NVIDIA Spectrum SN5600 switch, and breaks it out into two 400G connections. Each 400G leg connects to a separate ConnectX-8 C8180 NIC, operating in 400G mode.

This approach suits older GPU setups that need less communication throughput per node. Because ConnectX-8 can run at 400G and is also backward compatible with 200G/100G rates, this layout helps it fit into existing infrastructure.

Infraeo offers breakout OSFP to 2xOSFP-RHS DACs, AECs, and ACCs for short-reach connections. On the optics side, Infraeo offers single-port and twin-port SR and DR transceivers in OSFP and OSFP-RHS form factors, covering both the switch side and the NIC side. Our 800G OSFP-RHS transceivers can be throttled down to 400G by enabling only two ports. Low-power LPO transceivers are also available for power-sensitive deployments. For more details, see our product offerings at https://www.infraeo.com/products/

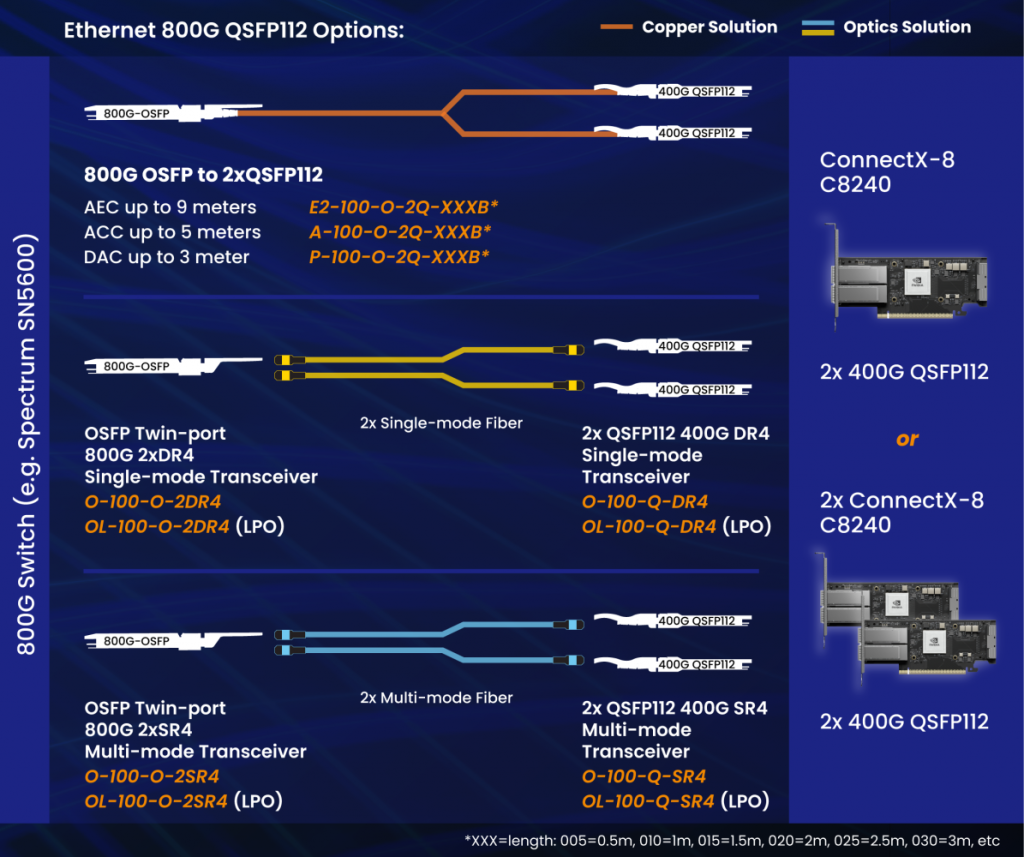

Ethernet — 800G Switch to QSFP112 ConnectX-8 (C8240)

This setup connects an 800G OSFP port on an 800G switch to the two QSFP112 ports on the ConnectX-8 C8240. The two ports give a choice: populate one QSFP112 port for a half-loaded 400G connection, or populate both to reach 800G.

Configuration 3: 800G Ethernet — 1 800G Switch to 1 ConnectX-8 C8240

In this setup, a single 800G OSFP port on the Spectrum SN5600 switch is broken out into two 400G QSFP112 connections. On the NIC side, these two modules are routed into both QSFP112 ports on a single ConnectX-8 C8240 NIC, enabling a full 800G connection across two cages.

Each QSFP112 port on the C8240 handles 400G, so this configuration fully utilizes the NIC’s bandwidth capacity. It’s a good option when using switches with 800G OSFP ports while deploying NICs built with dual QSFP112 interfaces.

Infraeo offers breakout 800G OSFP to 2xQSFP112 DACs, AECs, and ACCs for short-reach connections. On the optics side, Infraeo offers 800G and 400G twin-port SR and DR transceivers in OSFP and QSFP112 form factors, covering both the switch side and the NIC side. Low-power LPO transceivers are also available for power-sensitive deployments. For more details, see our product offerings at https://www.infraeo.com/products/

Configuration 4: 800G Ethernet — 1 800G Switch to 2 ConnectX-8 C8240

In this setup, one 800G OSFP port on an 800G switch is split into two 400G QSFP112 connections. Unlike configuration 3, each 400G leg connects to a separate ConnectX-8 C8240 NIC, using just one of the two available QSFP112 ports per NIC.

The connectivity product used in configuration 4 is the same as the one used in configuration 3, but here the two 400G ends go to different servers. It’s a common way to distribute bandwidth efficiently across two hosts, each running at 400G.

Infraeo’s 800G-to-2×400G breakout cables and 800G/400G OSFP/QSFP112 transceivers support both configurations 3 and 4. Whether both ends terminate at a single NIC or at two separate ones, the cabling and optics remain the same. For more details, see our product offerings at https://www.infraeo.com/products/
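The four Ethernet configurations above can be summarized as a lookup table. This is a sketch for planning purposes only: `EthernetConfig` and `find_config` are hypothetical names, and the cable descriptions are shorthand for the topologies described in this article, not product part numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EthernetConfig:
    number: int        # configuration number used in this article
    nic_model: str     # "C8180" (OSFP) or "C8240" (dual QSFP112)
    nic_count: int     # NICs served by one 800G switch port
    gbps_per_nic: int  # bandwidth each NIC receives
    cable: str         # cable topology, switch end first


CONFIGS = (
    EthernetConfig(1, "C8180", 1, 800, "OSFP to OSFP-RHS, straight-through"),
    EthernetConfig(2, "C8180", 2, 400, "OSFP to 2xOSFP-RHS, breakout"),
    EthernetConfig(3, "C8240", 1, 800, "OSFP to 2xQSFP112, breakout"),
    EthernetConfig(4, "C8240", 2, 400, "OSFP to 2xQSFP112, breakout"),
)


def find_config(nic_model: str, nic_count: int) -> EthernetConfig:
    """Return the configuration matching a NIC model and NIC count."""
    for cfg in CONFIGS:
        if cfg.nic_model == nic_model and cfg.nic_count == nic_count:
            return cfg
    raise ValueError(f"no configuration for {nic_count} x {nic_model}")


if __name__ == "__main__":
    for cfg in CONFIGS:
        # Every configuration consumes exactly one 800G switch port.
        assert cfg.nic_count * cfg.gbps_per_nic == 800
        print(f"Config {cfg.number}: {cfg.nic_count} x {cfg.nic_model} "
              f"at {cfg.gbps_per_nic}G via {cfg.cable}")
```

Looking up `find_config("C8240", 1)` and `find_config("C8240", 2)` returns the same cable topology, which mirrors the point that configurations 3 and 4 share cabling and differ only in where the two 400G ends terminate.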

1.6T Configurations:

Today, most Ethernet deployments top out at 800G: the current Ethernet standard, IEEE 802.3df, supports up to that rate. That’s why even platforms like ConnectX-8 still run Ethernet connections at 400G per port on the NIC side while connecting to 800G ports on the switch side. The full jump to 1.6T depends on the finalization of IEEE 802.3dj, which is still in progress.
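The relationship between lane rate and port rate is simple multiplication. The sketch below assumes the commonly cited lane rates for the two standards (100 Gb/s per lane for IEEE 802.3df, 200 Gb/s per lane for IEEE 802.3dj) and an 8-lane port. Treat the 802.3dj figures as provisional while the standard is still being finalized.

```python
# Assumed electrical lane rate (Gb/s) associated with each standard.
LANE_GBPS = {"802.3df": 100, "802.3dj": 200}


def port_rate_gbps(standard: str, lanes: int) -> int:
    """Aggregate port rate for a given standard and lane count."""
    return LANE_GBPS[standard] * lanes


if __name__ == "__main__":
    print("802.3df, 8 lanes:", port_rate_gbps("802.3df", 8), "Gb/s")
    print("802.3dj, 4 lanes:", port_rate_gbps("802.3dj", 4), "Gb/s")
    print("802.3dj, 8 lanes:", port_rate_gbps("802.3dj", 8), "Gb/s")
```

With 100G lanes, an 8-lane port stops at 800G. Reaching 1.6T in the same 8-lane footprint requires 200G lanes.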

Infraeo is preparing for both 800G and 1.6T Ethernet, with copper and optical solutions scheduled to roll out by the end of the year. No matter how the standard evolves, we’re already engineering what comes next.

Closing Thoughts

ConnectX-8 marks a major shift in AI infrastructure, doubling NIC-side bandwidth to 800G and aligning with PCIe Gen6, 200G-per-lane signaling, and the emerging 1.6T switch ecosystem. It supports both Ethernet and InfiniBand across OSFP and QSFP112 configurations, allowing flexible deployment across AI clusters and data centers.

Whether operating in 400G mode for legacy compatibility or scaling to full 800G for next-gen systems, ConnectX-8 demands high-quality, standards-aligned connectivity. Infraeo supports all key configurations with a full portfolio of DACs, ACCs, AOCs, loopbacks, and transceivers, engineered to meet the signal integrity, thermal, and breakout requirements of modern high-speed interconnects.

As the ecosystem moves toward 1.6T networking, Infraeo remains ready — with product lines designed to scale from 400G and 800G today to 1.6T by the end of the year.